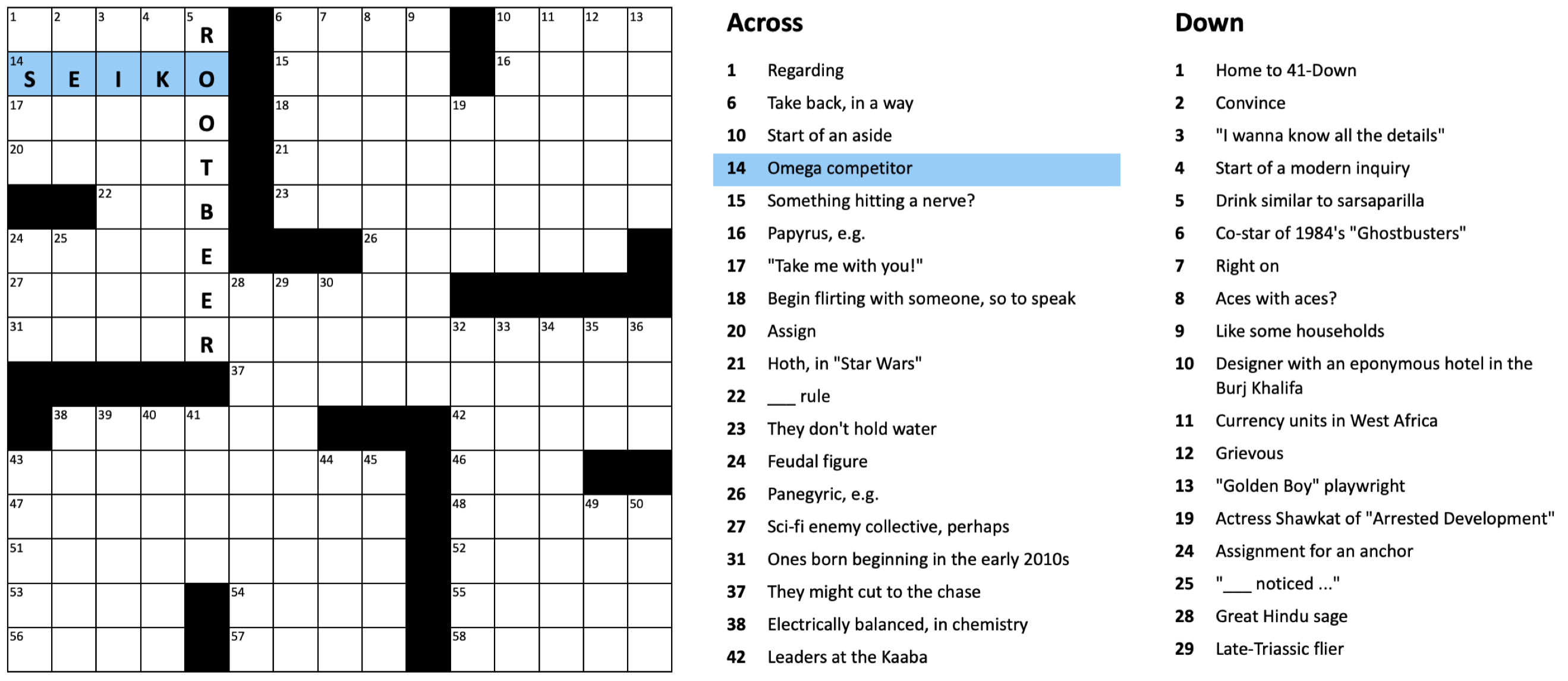

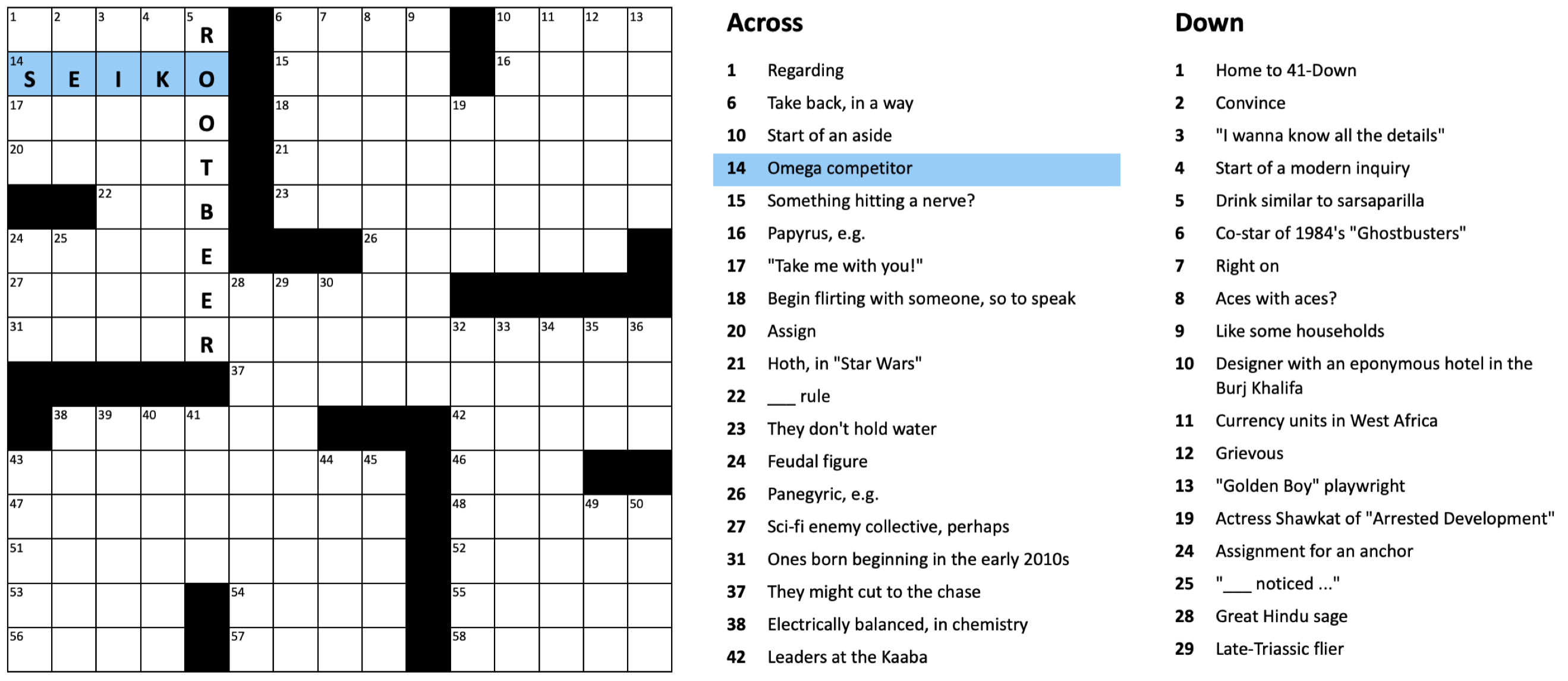

Figure 1: CoarsenConf architecture.

Molecular conformer generation is a fundamental task in computational chemistry. The objective is to predict stable low-energy 3D molecular structures, known as conformers, given the 2D molecule. Accurate molecular conformations are crucial for various applications that depend on precise spatial and geometric qualities, including drug discovery and protein docking.

We introduce CoarsenConf, an SE(3)-equivariant hierarchical variational autoencoder (VAE) that pools information from fine-grain atomic coordinates to a coarse-grain subgraph level representation for efficient autoregressive conformer generation.


TL;DR: Text Prompt -> LLM -> Intermediate Representation (such as an image layout) -> Stable Diffusion -> Image.

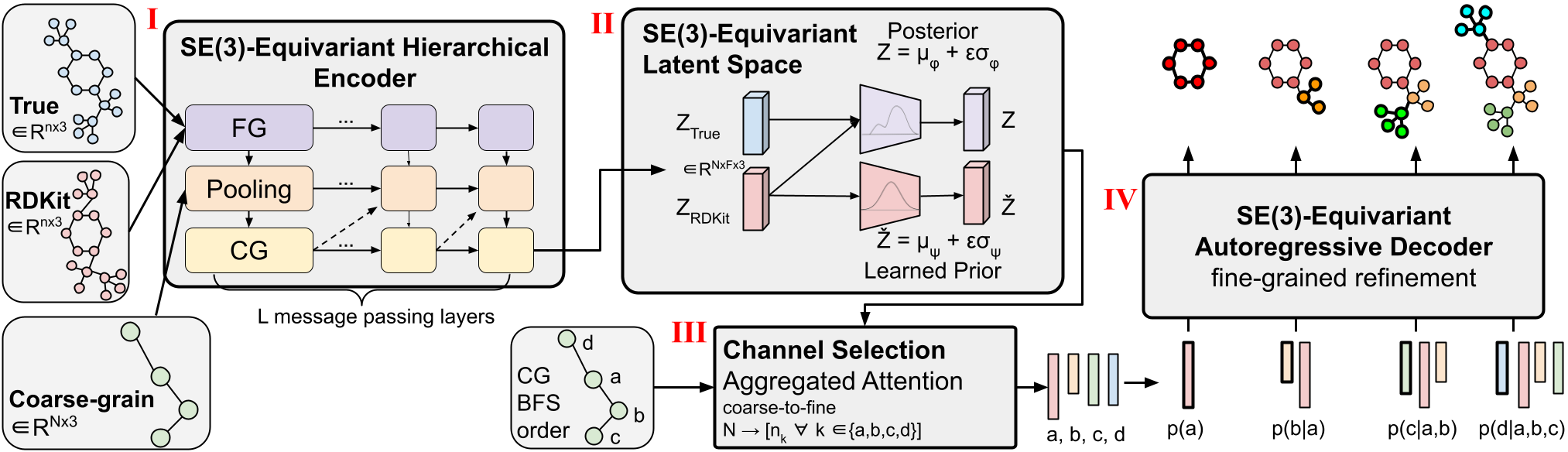

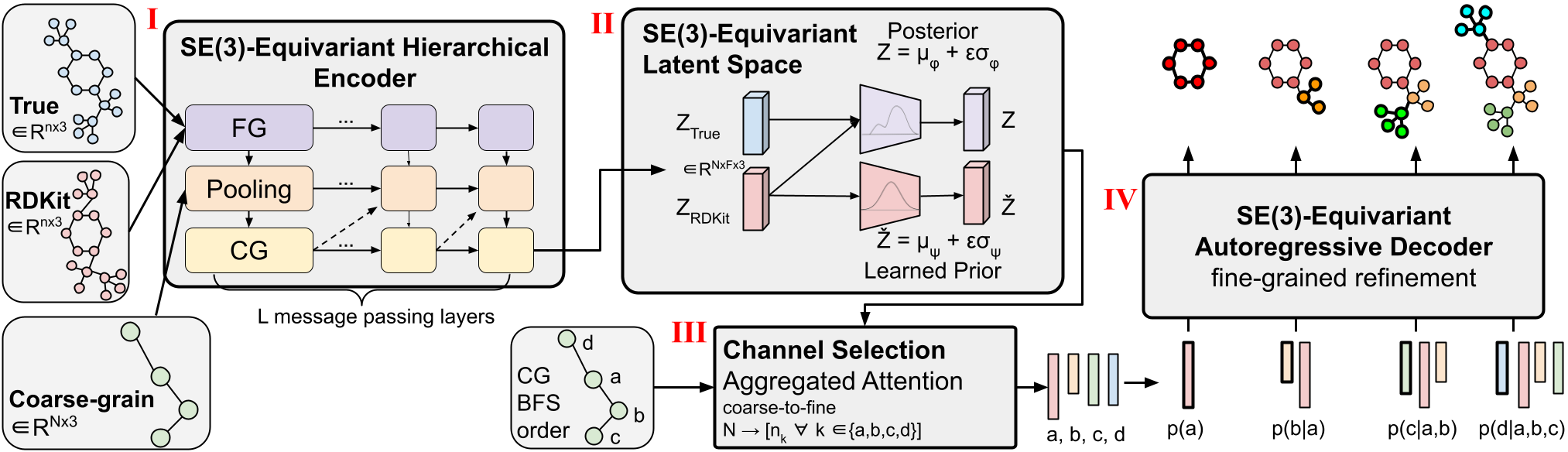

Recent advancements in text-to-image generation with diffusion models have yielded remarkable results synthesizing highly realistic and diverse images. However, despite their impressive capabilities, diffusion models, such as Stable Diffusion, often struggle to accurately follow the prompts when spatial or common sense reasoning is required.

The following figure lists four scenarios in which Stable Diffusion falls short in generating images that accurately correspond to the given prompts, namely negation, numeracy, attribute assignment, and spatial relationships. In contrast, our method, LLM-grounded Diffusion (LMD), delivers much better prompt understanding in text-to-image generation in those scenarios.

Figure 1: LLM-grounded Diffusion enhances the prompt understanding ability of text-to-image diffusion models.



Figure 1: “Interactive Fleet Learning” (IFL) refers to robot fleets in industry and academia that fall back on human teleoperators when necessary and continually learn from them over time.

In the last few years we have seen an exciting development in robotics and artificial intelligence: large fleets of robots have left the lab and entered the real world. Waymo, for example, has over 700 self-driving cars operating in Phoenix and San Francisco and is currently expanding to Los Angeles. Other industrial deployments of robot fleets include applications like e-commerce order fulfillment at Amazon and Ambi Robotics as well as food delivery at Nuro and Kiwibot.


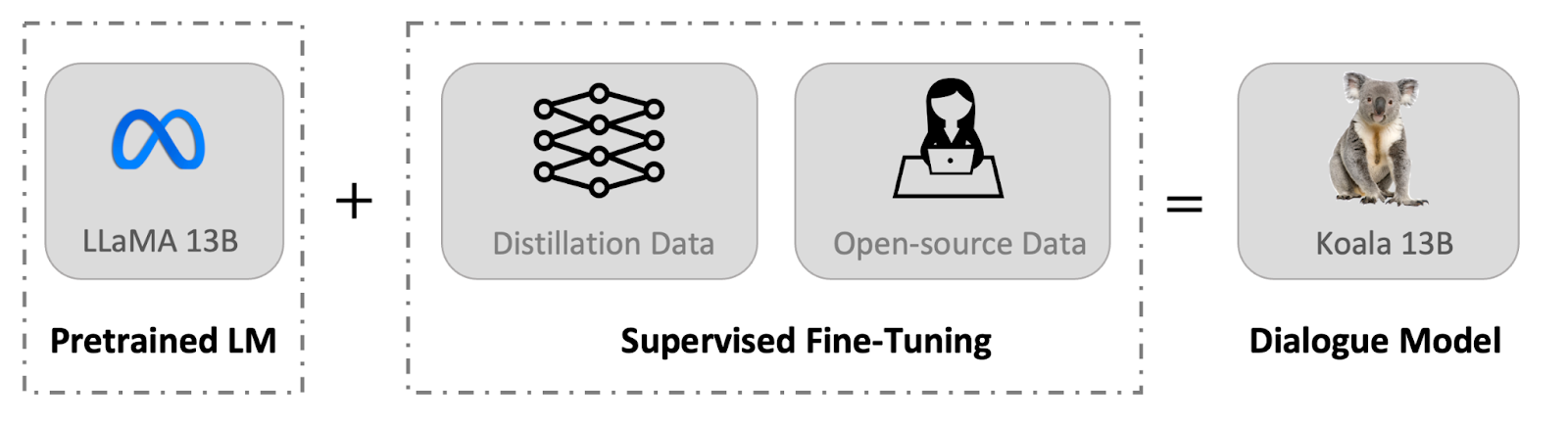

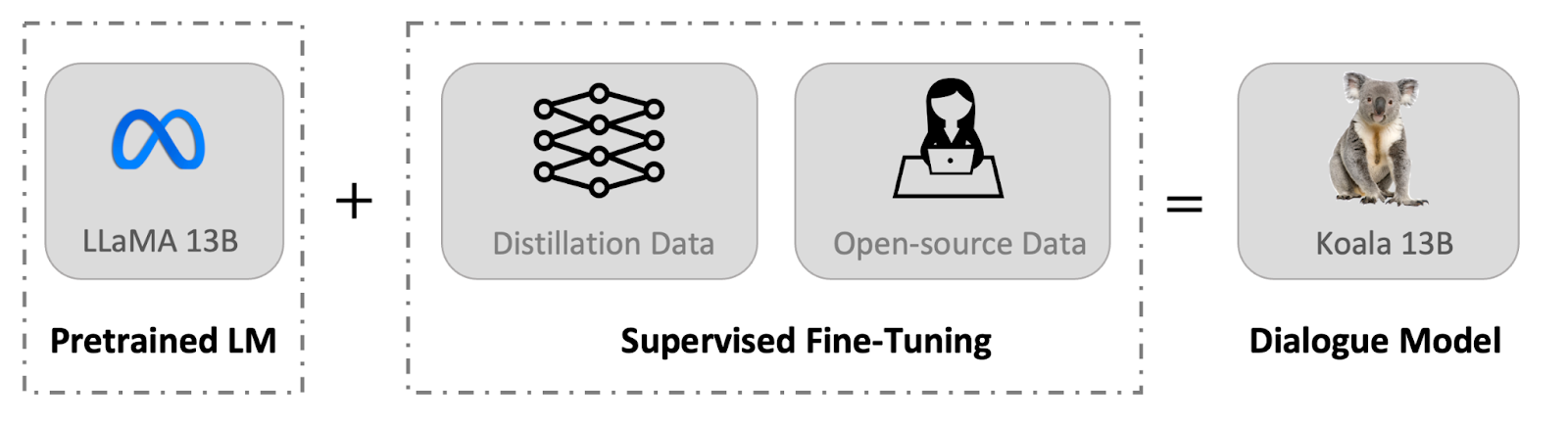

In this post, we introduce Koala, a chatbot trained by fine-tuning Meta’s LLaMA on dialogue data gathered from the web. We describe the dataset curation and training process of our model, and also present the results of a user study that compares our model to ChatGPT and Stanford’s Alpaca. Our results show that Koala can effectively respond to a variety of user queries, generating responses that are often preferred over Alpaca, and at least tied with ChatGPT in over half of the cases.

We hope that these results contribute further to the discourse around the relative performance of large closed-source models and smaller public models. In particular, they suggest that models that are small enough to be run locally can capture much of the performance of their larger cousins if trained on carefully sourced data. This might imply, for example, that the community should put more effort into curating high-quality datasets, as this might do more to enable safer, more factual, and more capable models than simply increasing the size of existing systems. We emphasize that Koala is a research prototype, and while we hope that its release will provide a valuable community resource, it still has major shortcomings in terms of content, safety, and reliability, and should not be used outside of research.


Reinforcement learning provides a conceptual framework for autonomous agents to learn from experience, analogously to how one might train a pet with treats. But practical applications of reinforcement learning are often far from natural: instead of using RL to learn through trial and error by actually attempting the desired task, typical RL applications use a separate (usually simulated) training phase. For example, AlphaGo did not learn to play Go by competing against thousands of humans, but rather by playing against itself in simulation. While this kind of simulated training is appealing for games where the rules are perfectly known, applying this to real world domains such as robotics can require a range of complex approaches, such as the use of simulated data, or instrumenting real-world environments in various ways to make training feasible under laboratory conditions. Can we instead devise reinforcement learning systems for robots that allow them to learn directly “on-the-job”, while performing the task that they are required to do? In this blog post, we will discuss ReLMM, a system that we developed that learns to clean up a room directly with a real robot via continual learning.

We evaluate our method on different tasks that range in difficulty. The top-left task has uniform white blobs to pick up with no obstacles, while other rooms have objects of diverse shapes and colors, obstacles that increase navigation difficulty and obscure the objects, and patterned rugs that make it difficult to see the objects against the ground.


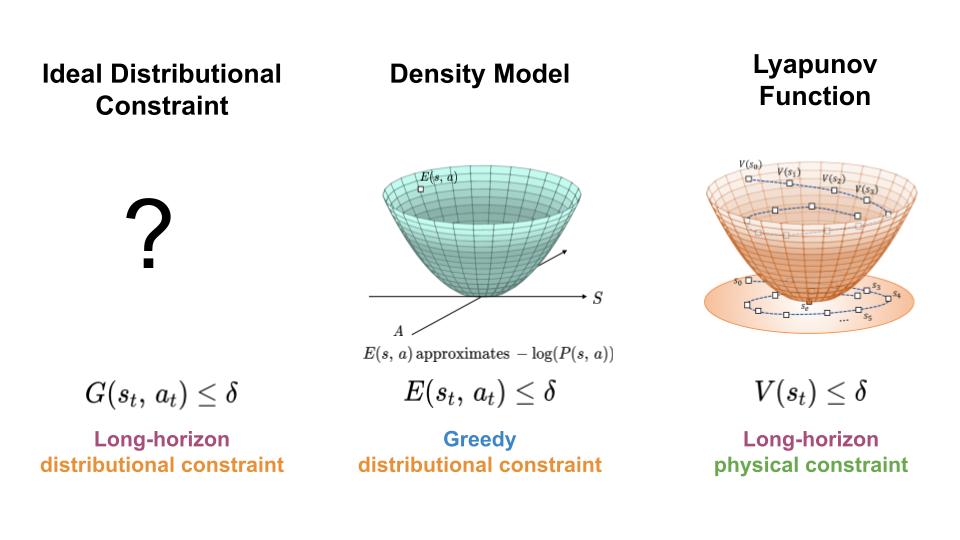

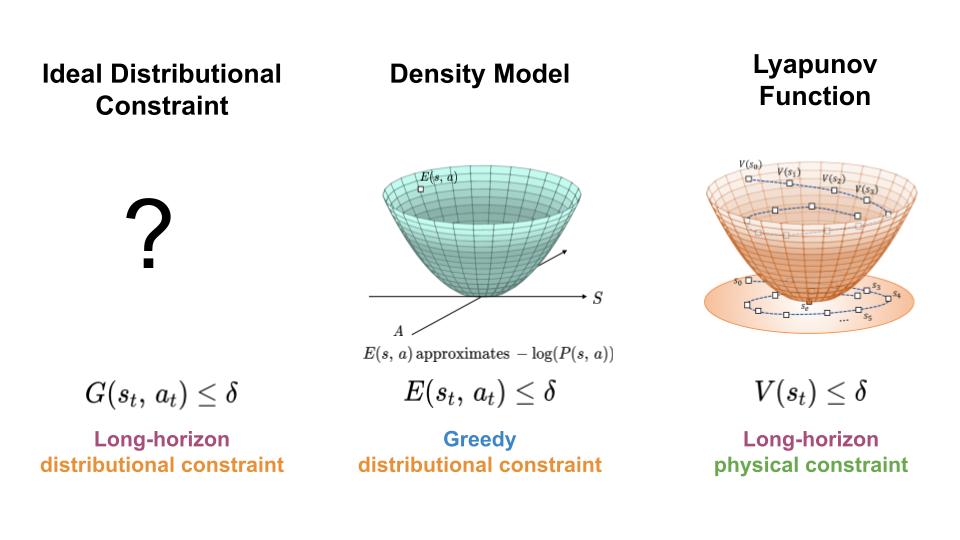

To regulate the distribution shift experienced by learning-based controllers, we seek a mechanism for constraining the agent to regions of high data density throughout its trajectory (left). Here, we present an approach which achieves this goal by combining features of density models (middle) and Lyapunov functions (right).

In order to make use of machine learning and reinforcement learning in controlling real world systems, we must design algorithms which not only achieve good performance, but also interact with the system in a safe and reliable manner. Most prior work on safety-critical control focuses on maintaining the safety of the physical system, e.g., preventing legged robots from falling over or autonomous vehicles from colliding with obstacles. However, for learning-based controllers, there is another source of safety concern: because machine learning models are only optimized to output correct predictions on the training data, they are prone to outputting erroneous predictions when evaluated on out-of-distribution inputs. Thus, if an agent visits a state or takes an action that is very different from those in the training data, a learning-enabled controller may “exploit” the inaccuracies in its learned component and output actions that are suboptimal or even dangerous.


Deep neural networks have enabled technological wonders ranging from voice recognition to machine translation to protein engineering, but their design and application are nonetheless notoriously unprincipled.

The development of tools and methods to guide this process is one of the grand challenges of deep learning theory.

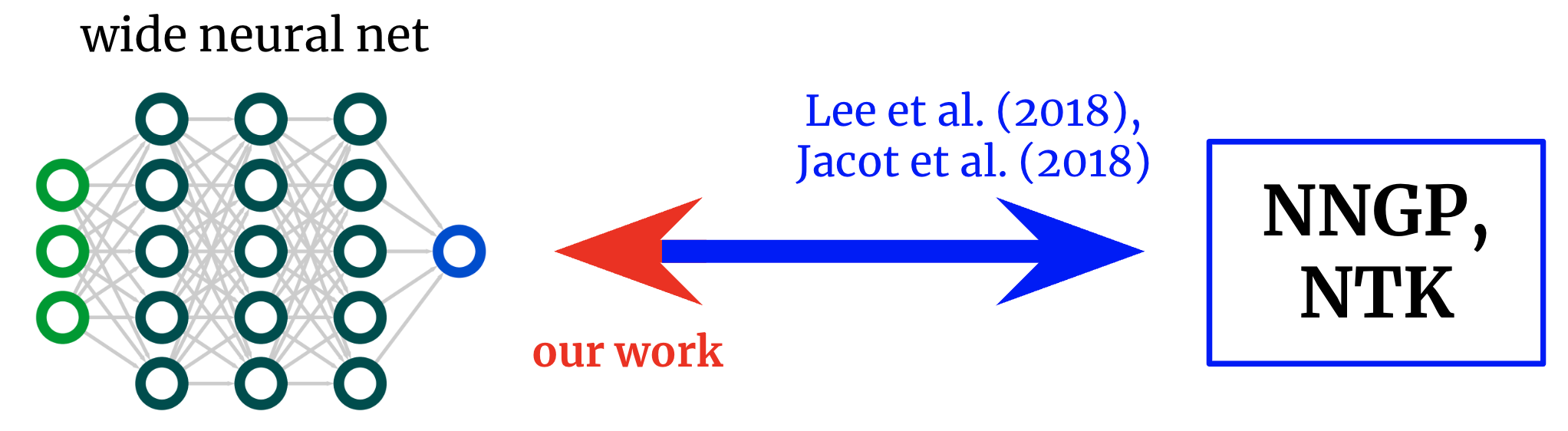

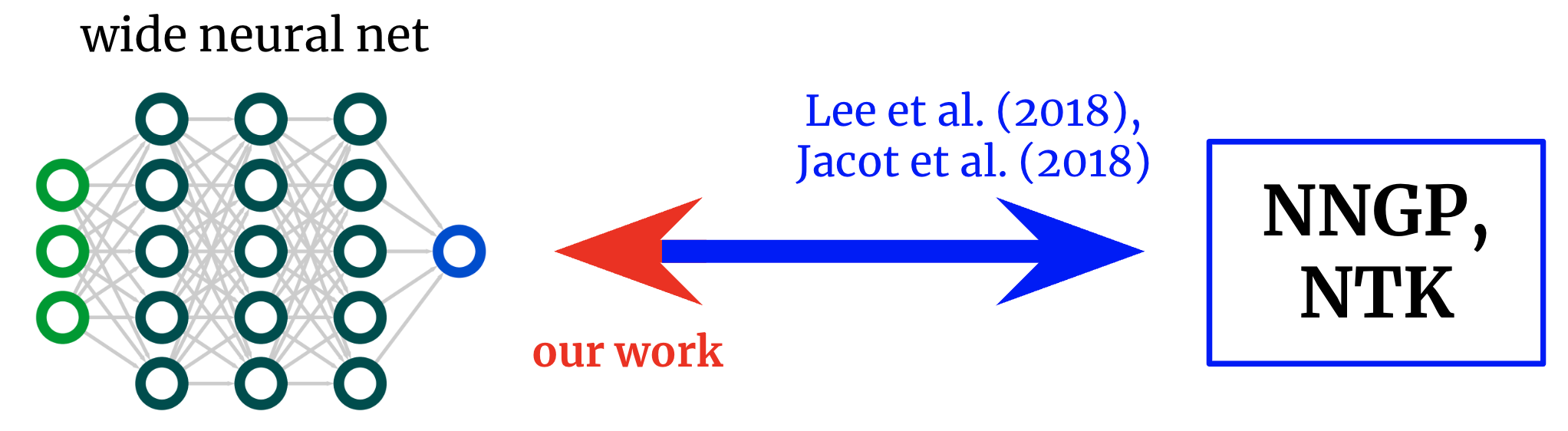

In Reverse Engineering the Neural Tangent Kernel, we propose a paradigm for bringing some principle to the art of architecture design using recent theoretical breakthroughs: first design a good kernel function – often a much easier task – and then “reverse-engineer” a net-kernel equivalence to translate the chosen kernel into a neural network.

Our main theoretical result enables the design of activation functions from first principles, and we use it to create one activation function that mimics deep \(\textrm{ReLU}\) network performance with just one hidden layer and another that soundly outperforms deep \(\textrm{ReLU}\) networks on a synthetic task.

Kernels back to networks. Foundational works derived formulae that map from wide neural networks to their corresponding kernels. We obtain an inverse mapping, permitting us to start from a desired kernel and turn it back into a network architecture.


Wei Fu, Chao Yu, Zelai Xu, Jiaqi Yang, Yi Wu

Jul 10, 2022

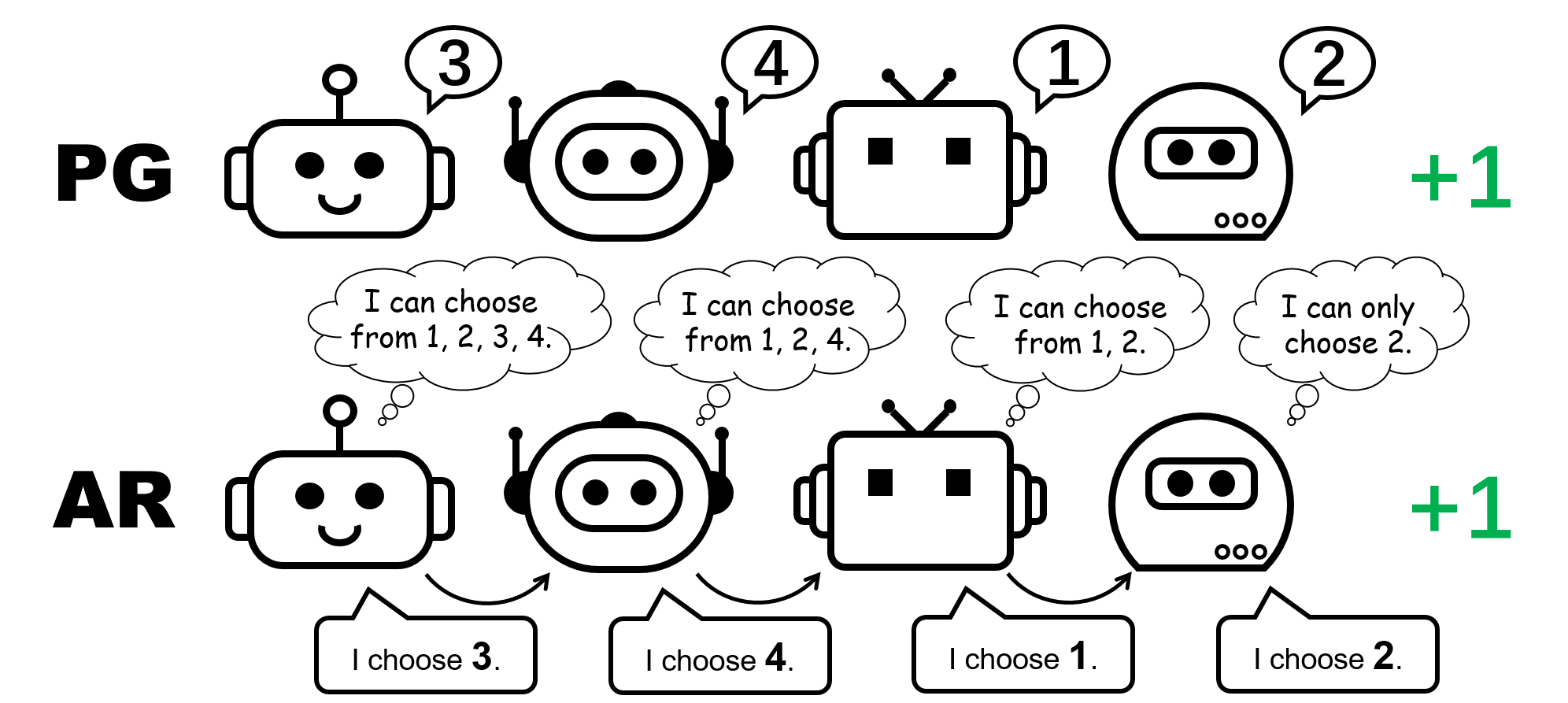

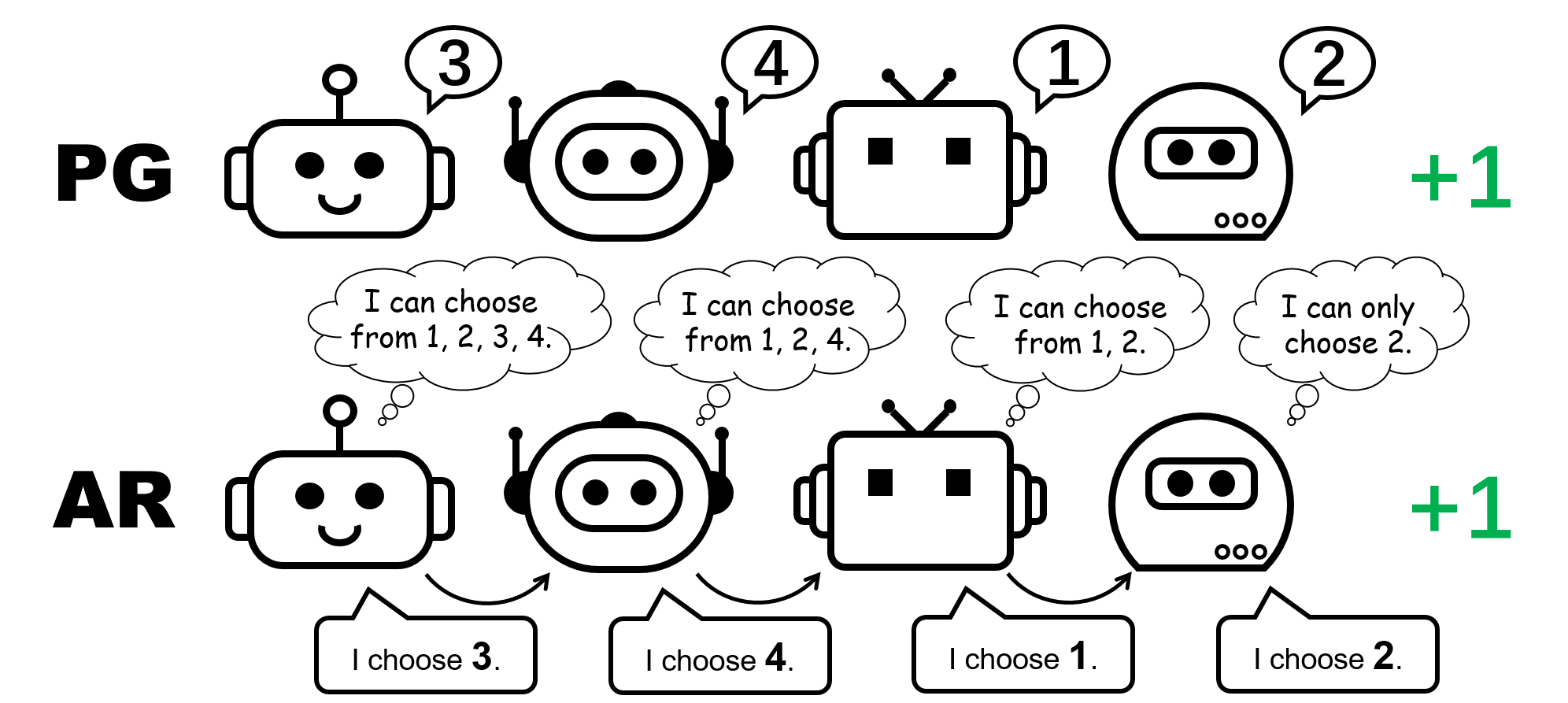

In cooperative multi-agent reinforcement learning (MARL), policy gradient (PG) methods are typically believed to be less sample efficient than value decomposition (VD) methods, because PG methods are on-policy whereas VD methods are off-policy. However, some recent empirical studies demonstrate that with proper input representation and hyper-parameter tuning, multi-agent PG can achieve surprisingly strong performance compared to off-policy VD methods.

Why could PG methods work so well? In this post, we will present concrete analysis to show that in certain scenarios, e.g., environments with a highly multi-modal reward landscape, VD can be problematic and lead to undesired outcomes. By contrast, PG methods with individual policies can converge to an optimal policy in these cases. In addition, PG methods with auto-regressive (AR) policies can learn multi-modal policies.

Figure 1: Different policy representations for the 4-player permutation game.
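
To make the VD limitation concrete, here is a minimal sketch under stated assumptions, not the analysis from the post itself. It assumes a 2-player version of the permutation game (payoff 1 when the two agents pick different actions, 0 otherwise) and a VDN-style additive decomposition, `Q_tot(a1, a2) = Q1(a1) + Q2(a2)`, as the simplest instance of value decomposition. The helper name `additive_fit` is hypothetical. For a complete payoff table, the least-squares additive fit is given by row mean plus column mean minus grand mean.

```python
def additive_fit(payoff):
    """Least-squares fit of payoff[i][j] by q1[i] + q2[j] (assumed VDN-style form)."""
    n_rows, n_cols = len(payoff), len(payoff[0])
    grand = sum(map(sum, payoff)) / (n_rows * n_cols)
    row_mean = [sum(row) / n_cols for row in payoff]
    col_mean = [sum(payoff[i][j] for i in range(n_rows)) / n_rows
                for j in range(n_cols)]
    # Split the grand mean evenly between the two per-agent utilities.
    q1 = [m - grand / 2 for m in row_mean]
    q2 = [m - grand / 2 for m in col_mean]
    return q1, q2


# Assumed 2-player permutation game: reward 1 iff the actions differ.
payoff = [[0.0, 1.0],
          [1.0, 0.0]]

q1, q2 = additive_fit(payoff)
q_tot = [[q1[i] + q2[j] for j in range(2)] for i in range(2)]

print("Q1:", q1)        # per-agent utilities are flat
print("Q2:", q2)
print("Q_tot:", q_tot)  # every joint action gets the same value
```

In this toy case the best additive fit assigns the same value to every joint action, so greedy per-agent action selection cannot tell the two optimal modes apart from the two failure cases.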


FIGS (Fast Interpretable Greedy-tree Sums): A method for building interpretable models by simultaneously growing an ensemble of decision trees in competition with one another.

Recent machine-learning advances have led to increasingly complex predictive models, often at the cost of interpretability. Interpretability is often essential, particularly in high-stakes applications such as clinical decision-making; interpretable models help with identifying errors, leveraging domain knowledge, and making speedy predictions.

In this blog post we’ll cover FIGS, a new method for fitting an interpretable model that takes the form of a sum of trees. Real-world experiments and theoretical results show that FIGS can effectively adapt to a wide range of structure in data, achieving state-of-the-art performance in several settings, all without sacrificing interpretability.
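
As a rough illustration of what "a sum of trees" means at prediction time, the sketch below sums the leaf values reached in each of several small trees. It only shows the model form; it does not implement the FIGS fitting procedure, and the `Node` class, the function names, and the example trees are all hypothetical rather than taken from any FIGS implementation.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Node:
    """Hypothetical tree node: a leaf if `feature` is None, else a split."""
    value: float = 0.0
    feature: Optional[int] = None
    threshold: float = 0.0
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def tree_predict(node: Node, x: Sequence[float]) -> float:
    # Walk down from the root until a leaf is reached.
    while node.feature is not None:
        node = node.left if x[node.feature] <= node.threshold else node.right
    return node.value


def sum_of_trees_predict(trees: Sequence[Node], x: Sequence[float]) -> float:
    # The model output is the sum of each tree's contribution.
    return sum(tree_predict(tree, x) for tree in trees)


# Two made-up single-split trees, each looking at a different feature.
tree_a = Node(feature=0, threshold=0.5,
              left=Node(value=0.0), right=Node(value=0.5))
tree_b = Node(feature=1, threshold=2.0,
              left=Node(value=0.125), right=Node(value=0.25))

score_high = sum_of_trees_predict([tree_a, tree_b], [1.0, 3.0])
score_low = sum_of_trees_predict([tree_a, tree_b], [0.0, 1.0])
print(score_high, score_low)
```

Because each tree contributes an additive term, a reader can inspect every tree separately and see how much it adds to the final score.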


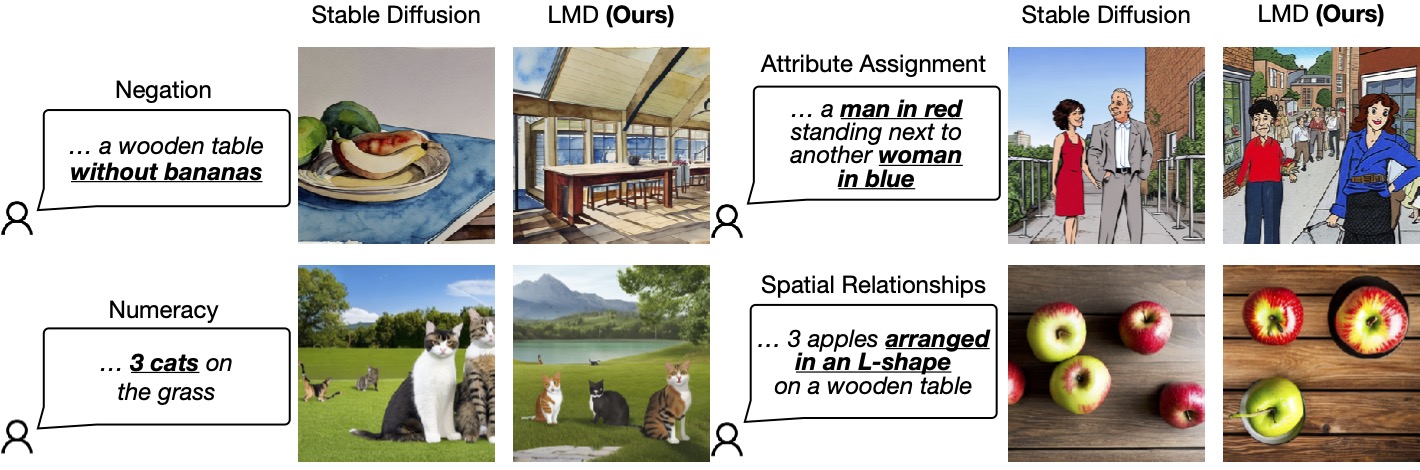

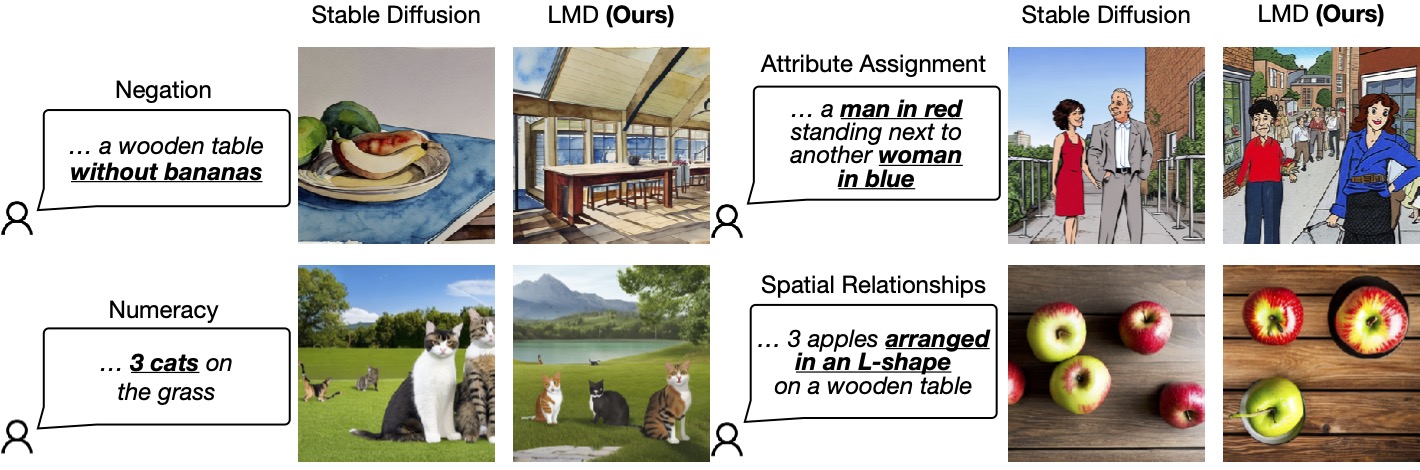

We recently published the Berkeley Crossword Solver (BCS), the current state of the art for solving American-style crossword puzzles. The BCS combines neural question answering and probabilistic inference to achieve near-perfect performance on most American-style crossword puzzles, like the one shown below:

Figure 1: Example American-style crossword puzzle

An earlier version of the BCS, in conjunction with Dr.Fill, was the first computer program to outscore all human competitors in the world’s top crossword tournament. The most recent version is the current top-performing system on crossword puzzles from The New York Times, achieving 99.7% letter accuracy (see the technical paper, web demo, and code release).
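
For readers unfamiliar with the metric, here is a small sketch of how letter accuracy could be computed. It assumes the metric is the fraction of fillable cells whose predicted letter matches the solution; the exact definition used in the technical paper may differ. The grid encoding (a list of strings with `#` for black squares) and the function name are hypothetical.

```python
def letter_accuracy(predicted, gold, block="#"):
    """Fraction of non-block cells where the predicted letter matches gold."""
    same_shape = len(predicted) == len(gold) and all(
        len(p) == len(g) for p, g in zip(predicted, gold)
    )
    if not same_shape:
        raise ValueError("grids must have the same shape")
    total = correct = 0
    for p_row, g_row in zip(predicted, gold):
        for p, g in zip(p_row, g_row):
            if g == block:  # black squares are not scored
                continue
            total += 1
            correct += p == g
    return correct / total if total else 0.0


# Made-up 3x3 grid with one black square and one wrong letter.
gold = ["CAT", "A#O", "BEE"]
pred = ["CAT", "A#O", "BEA"]
acc = letter_accuracy(pred, gold)
print(f"letter accuracy: {acc:.3f}")
```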

